The Birthplace of AI

An essay about the 1956 “Dartmouth workshop”

The Dartmouth Summer Research Project on Artificial Intelligence was a summer workshop widely considered to be the founding moment of artificial intelligence as a field of research. Held for eight weeks in Hanover, New Hampshire, in 1956, the conference gathered 20 of the brightest minds in computer science and cognitive science for a workshop dedicated to the conjecture:

"...that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer." - A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence (McCarthy et al., 1955)

Motivation

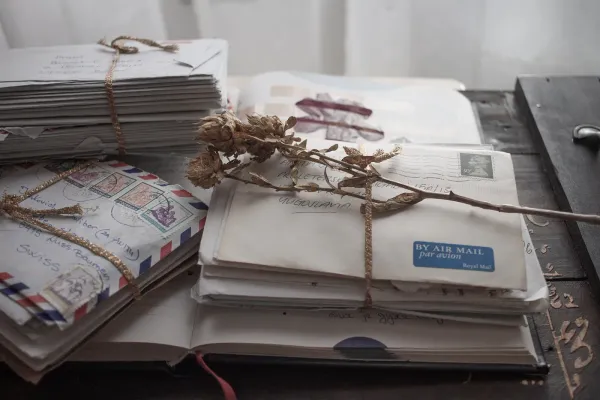

Prior to the conference, John McCarthy, then Assistant Professor of Mathematics at Dartmouth, and Claude Shannon of Bell Labs had been co-editing Automata Studies, the then-forthcoming Volume 34 of the Annals of Mathematics Studies (Shannon & McCarthy, 1956). Automata are self-operating machines designed to follow predetermined sequences of operations or respond to predetermined instructions. As engineering mechanisms they appear in a wide variety of everyday applications, such as mechanical clocks in which a hammer strikes a bell or a cuckoo appears to sing.

According to James Moor (2006), McCarthy in particular had been disappointed by the submissions to the volume and their lack of focus on the possibility of computers possessing intelligence beyond the rather simple behaviors of automata:

“At the time I believed if only we could get everyone who was interested in the subject together to devote time to it and avoid distractions, we could make real progress” — John McCarthy

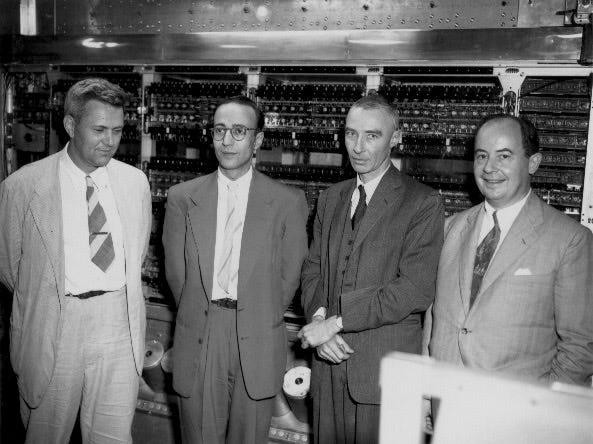

The initial group McCarthy had in mind included Marvin Minsky, whom he had known since they were graduate students together at Fine Hall in the early 1950s. The two had talked about artificial intelligence then, and Minsky’s PhD dissertation in mathematics had been on neural nets (Moor, 2006) and the structure of the human brain (Nasar, 1998). They had both been hired at Bell Labs alongside Claude Shannon in 1952. There, McCarthy had learned that the much more senior Shannon was also interested in the field. Finally, McCarthy had run into Nathaniel Rochester at MIT when IBM was giving the institute a computer. Rochester too showed interest in artificial intelligence (McCorduck, 1979), and so the four agreed to submit a proposal for a workshop to the Rockefeller Foundation.

The Proposal

“We propose that a two-month, ten-man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire”

The written proposal to the Rockefeller Foundation was formulated by McCarthy at Dartmouth and Minsky, then at Harvard. They brought their proposal to the two senior researchers, Claude Shannon at Bell Labs and Nathaniel Rochester at IBM, and got their support (Crevier, 1993). The proposal went as follows (McCarthy et al., 1955, reprinted in AI Magazine Volume 27, Number 4, pp. 12–14):

A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence (August 31st, 1955)

We propose that a 2 month, 10 man study of artificial intelligence be carried out during the summer of 1956 at Dartmouth College in Hanover, New Hampshire. The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves. We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer. The following are some aspects of the artificial intelligence problem:

1. Automatic Computers

If a machine can do a job, then an automatic calculator can be programmed to simulate the machine. The speeds and memory capacities of present computers may be insufficient to simulate many of the higher functions of the human brain, but the major obstacle is not lack of machine capacity, but our inability to write programs taking full advantage of what we have.

2. How Can a Computer be Programmed to Use a Language

It may be speculated that a large part of human thought consists of manipulating words according to rules of reasoning and rules of conjecture. From this point of view, forming a generalization consists of admitting a new word and some rules whereby sentences containing it imply and are implied by others. This idea has never been very precisely formulated nor have examples been worked out.

3. Neuron Nets

How can a set of (hypothetical) neurons be arranged so as to form concepts. Considerable theoretical and experimental work has been done on this problem by Uttley, Rashevsky and his group, Farley and Clark, Pitts and McCulloch, Minsky, Rochester and Holland, and others. Partial results have been obtained but the problem needs more theoretical work.

4. Theory of the Size of a Calculation

If we are given a well-defined problem (one for which it is possible to test mechanically whether or not a proposed answer is a valid answer) one way of solving it is to try all possible answers in order. This method is inefficient, and to exclude it one must have some criterion for efficiency of calculation. Some consideration will show that to get a measure of the efficiency of a calculation it is necessary to have on hand a method of measuring the complexity of calculating devices which in turn can be done if one has a theory of the complexity of functions. Some partial results on this problem have been obtained by Shannon, and also by McCarthy.

5. Self-improvement

Probably a truly intelligent machine will carry out activities which may best be described as self-improvement. Some schemes for doing this have been proposed and are worth further study. It seems likely that this question can be studied abstractly as well.

6. Abstractions

A number of types of “abstraction” can be distinctly defined and several others less distinctly. A direct attempt to classify these and to describe machine methods of forming abstractions from sensory and other data would seem worthwhile.

7. Randomness and Creativity

A fairly attractive and yet clearly incomplete conjecture is that the difference between creative thinking and unimaginative competent thinking lies in the injection of a some randomness. The randomness must be guided by intuition to be efficient. In other words, the educated guess or the hunch include controlled randomness in otherwise orderly thinking.

Included with the proposal were short biographies of the “proposers”:

Claude E. Shannon, Mathematician, Bell Telephone Laboratories. Shannon developed the statistical theory of information, the application of propositional calculus to switching circuits, and has results on the efficient synthesis of switching circuits, the design of machines that learn, cryptography, and the theory of Turing machines. He and J. McCarthy are coediting an Annals of Mathematics study on “The Theory of Automata”.

Marvin L. Minsky, Harvard Junior Fellow in Mathematics and Neurology. Minsky has built a machine for simulating learning by nerve nets and has written a Princeton Ph.D thesis in mathematics entitled, “Neural Nets and the Brain Model Problem” which includes results in learning theory and the theory of random neural nets.

Nathaniel Rochester, Manager of Information Research, IBM Corporation, Poughkeepsie, New York. Rochester was concerned with the development of radar for seven years and computing machinery for seven years. He and another engineer were jointly responsible for the design of the IBM Type 701 which is a large scale automatic computer in wide use today. He worked out some of the automatic programming techniques which are in wide use today and has been concerned with problems of how to get machines to do tasks which previously could be done only by people. He has also worked on simulation of nerve nets with particular emphasis on using computers to test theories in neurophysiology.

John McCarthy, Assistant Professor of Mathematics, Dartmouth College. McCarthy has worked on a number of questions connected with the mathematical nature of the thought process including the theory of Turing machines, the speed of computers, the relation of a brain model to its environment, and the use of languages by machines. Some results of this work are included in the forthcoming “Annals Study” edited by Shannon and McCarthy. McCarthy’s other work has been in the field of differential equations.

The entire proposal is available on Stanford’s website. Salaries for participants not supported by private institutions (such as Bell Labs and IBM) were estimated at $1,200 per person, and $700 for two graduate students. Railway fare averaging $300 was to be provided to the eight participants not residing in New Hampshire. The Rockefeller Foundation granted $7,500 of the proposed budget of $13,500 towards the conference, which ran for roughly six to eight weeks (accounts differ) during the summer of 1956, starting around June 18th and running until August 17th.

Attendees

Although they came from a wide variety of backgrounds (mathematics, psychology, electrical engineering and more), the attendees at the 1956 Dartmouth conference shared a common defining belief, namely that the act of thinking is not unique to humans or even to biological beings. Rather, they believed that computation is a formally deducible phenomenon which can be understood in a scientific way and that the best nonhuman instrument for doing so is the digital computer (McCorduck, 1979).

The four initial participants each invited people who shared their belief about this property of cognition. Among them were future Nobel laureates John F. Nash Jr. (1928–2015) and Herbert A. Simon (1916–2001). The former was likely invited by Minsky, as the two had been in graduate school at Princeton together in the early 1950s. Simon was likely invited either by McCarthy himself or by extension through Allen Newell, as the two had worked together at IBM in 1955. According to the notes made by Ray Solomonoff, twenty people would attend over the eight-week period. They included:

Herbert A. Simon (1916–2001)

According to one of McCarthy’s communications in May of 1956, Herbert A. Simon was scheduled to attend for the first two weeks of the workshop. Simon, then a Professor of Administration at Carnegie Tech (now Carnegie Mellon), had up until that point been working on decision-making problems (so-called “administrative behavior”) since receiving his Ph.D. in 1943 at the University of Chicago. He would go on to win the 1978 Nobel Memorial Prize in Economics for the work, and more broadly for “his pioneering research into the decision-making process within economic organizations”. At the time of the conference, he was collaborating with Allen Newell and Cliff Shaw on the Information Processing Language (IPL) and the pioneering computer programs Logic Theorist (1956) and General Problem Solver (1959). The former was the first program engineered to mimic the problem-solving abilities of a human, and would go on to prove 38 of the first 52 theorems in Whitehead and Russell’s Principia Mathematica, even finding new and more elegant proofs than those proposed in 1912. The latter was intended to work as a universal problem-solving machine, which could solve any problem expressed with sufficient formality as a set of well-formed formulas. It would go on to evolve into the Soar architecture for artificial intelligence.

Allen Newell (1927–1992)

Simon’s collaborator Allen Newell also attended for the first two weeks. The two had first met at the RAND Corporation in 1952 and created the IPL programming language together in 1956. The language was the first to introduce list manipulation, property lists, higher-order functions, computation with symbols and virtual machines to digital computing languages. Newell was also the programmer who first introduced list processing, the application of means-ends analysis to general reasoning, and the use of heuristics to limit the search space of a program. A year before the conference, Newell had published a paper entitled “The Chess Machine: An Example of Dealing with a Complex Task by Adaptation”, which included a theoretical outline of

"...an imaginative design for a computer program to play chess in humanoid fashion, incorporating notions of goals, aspiration levels for terminating search, satisficing with "good enough" moves, multidimensional evaluation functions, the generation of subgoals to implement goals and something like best first search. Information about the board was to be expressed symbolically in a language resembling the predicate calculus." - Excerpt, "Allen Newell" in Biographical Memoirs (1997) by Herbert A. Simon.

Newell attributed the idea that led to the paper to a “conversion experience” he had in a 1954 seminar while listening to Oliver Selfridge of Lincoln Laboratories, a student of Norbert Wiener, describe “running a computer program that learned to recognize letters and other patterns” (Simon, 1997).

Oliver Selfridge (1926–2008)

Oliver Selfridge reportedly also attended the workshop for four weeks. Selfridge would go on to write important early papers on neural networks, pattern recognition and machine learning. His “Pandemonium paper” (1959) is considered a classic in artificial intelligence circles.

Julian Bigelow (1913–2003)

Pioneering computer engineer Julian Bigelow was also there. Bigelow had worked with Norbert Wiener on one of the founding papers on cybernetics and, on Wiener’s recommendation, was hired by John von Neumann in 1946 to build one of the first digital computers, the IAS (or “the MANIAC”), at the Institute for Advanced Study.

Other attendees included Ray Solomonoff, Trenchard More, Nat Rochester, W. Ross Ashby, W.S. McCulloch, Abraham Robinson, David Sayre, Arthur Samuel and Kenneth R. Shoulders.

Outcomes

Somewhat ironically, the “intense and sustained two months of scientific exchange” envisioned by John McCarthy prior to the workshop never quite took place (McCorduck, 1979):

“Anybody who was there was pretty stubborn about pursuing the ideas that he had before he came, nor was there, as far as I could see, any real exchange of ideas. People came for different periods of time. The idea was that everyone would agree to come for six weeks, and the people came for periods ranging from two days to the whole six weeks, so not everybody was there at once. It was a great disappointment to me because it really meant that we couldn’t have regular meetings.”

Some tangible outcomes can, however, still be identified. First of all, the term artificial intelligence (AI) itself was coined by McCarthy for the workshop and first appeared in the 1955 proposal. Of the important work dating from the period of the conference, McCarthy later emphasized that of Newell, Shaw and Simon on the Information Processing Language (IPL) and their Logic Theory Machine (Moor, 2006). Among other related outcomes, one of the conference’s attendees, Arthur Samuel, would go on to coin the term “machine learning” in 1959 and create the Samuel Checkers-playing program, one of the world’s first successful self-learning programs. Oliver Selfridge is now often referred to as the “Father of Machine Perception” for his research into pattern recognition. Minsky would go on to win the Turing Award in 1969 for his “central role in creating, shaping, promoting and advancing the field of artificial intelligence”. Newell and Simon would go on to win the same award in 1975 for their contributions to “artificial intelligence and the psychology of human cognition”.

AI@50: The Next 50 Years

Funded by the Dean of Faculty, the Office of the Provost at Dartmouth, DARPA and private donors, a conference commemorating the 50th anniversary of the first gathering in 1956 took place from July 13th to 15th, 2006. The conference stated three objectives:

- To celebrate the 1956 gathering;

- To assess how far AI has progressed; and

- To project where AI is and should be going.

An article summarizing the events at the anniversary conference was later written by James Moor and published in AI Magazine Vol. 27 Number 4 (2006).